) |

Apple's iPhone 16 series introduces Visual Intelligence, an AI-powered tool for learning about objects and places through the camera. Announced on September 9th, the feature works much like Google Lens: users photograph an object or location and receive information about it. A key distinction, according to Apple, is that much of the processing happens on the iPhone itself rather than entirely in the cloud, as it does with Google Lens. On-device processing can shorten response times, and it supports user privacy by limiting how much image data has to leave the phone.

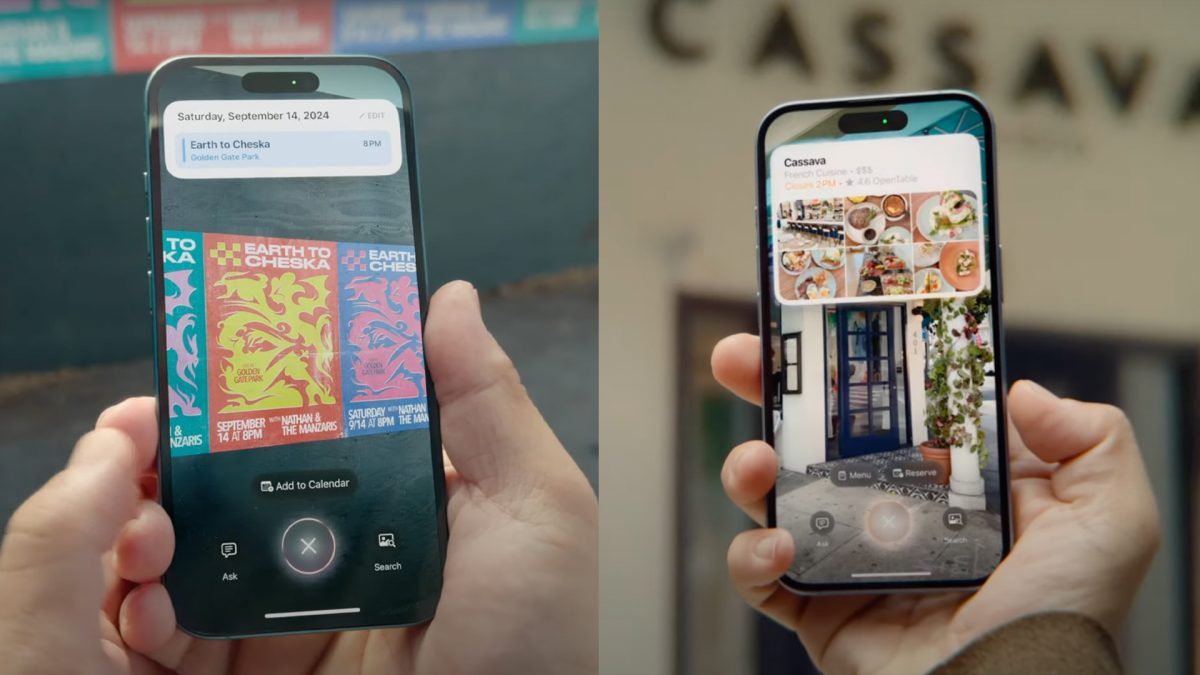

Visual Intelligence is part of the Apple Intelligence suite in iOS 18 and is designed to help users understand their surroundings in real time. It relies on multimodal AI, the same general approach used by Google and OpenAI, in which a model interprets images alongside text. Users point the iPhone camera at an object or location to get relevant information. For instance, photographing a restaurant can bring up its operating hours, user reviews, and menu options. Similarly, photographing an event flyer lets the feature extract key details such as the event title, date, and location.

Apple emphasizes that Visual Intelligence protects user privacy by combining on-device processing with Apple services that do not store user images. The feature also acts as a gateway to third-party services and models: users can search Google for what the camera sees, or photograph their notes to get help with study materials. These integrations extend the feature beyond identifying objects and places, making it useful in a wider range of everyday situations.